The Ethical Framework of Claude AI: Understanding Constitutional AI and Its Principles

In a world increasingly shaped by artificial intelligence, the ethical design and deployment of AI systems have become critical. Claude AI, developed by Anthropic, represents a new wave of ethically grounded AI technology. At the heart of Claude AI lies a training approach known as “Constitutional AI,” which is designed to promote safety, fairness, and alignment with human values. As tech-savvy professionals, policy researchers, and AI ethicists seek robust frameworks to guide responsible innovation, Claude AI emerges as a model worth deep exploration.

This article offers a comprehensive look into the ethical foundation of Claude AI. We will delve into the structure, guiding principles, and implications of Constitutional AI, explaining how it shapes Claude’s responses, behavior, and alignment with societal values.

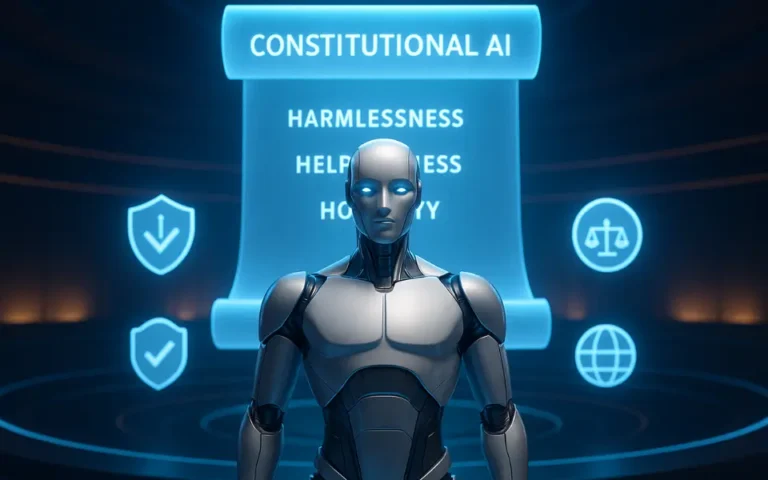

What is Claude AI?

Claude AI is a state-of-the-art conversational AI developed by Anthropic, a San Francisco-based AI safety and research company. Reportedly named after Claude Shannon, the father of information theory, Claude AI focuses on creating helpful, honest, and harmless AI interactions.

Overview of Constitutional AI

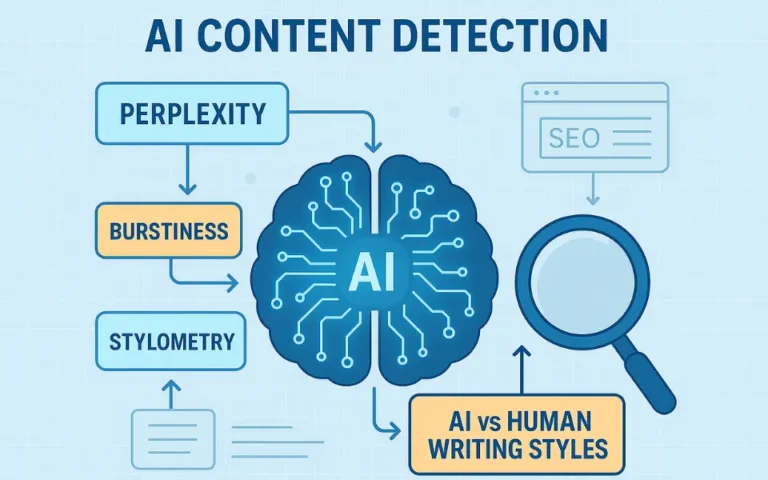

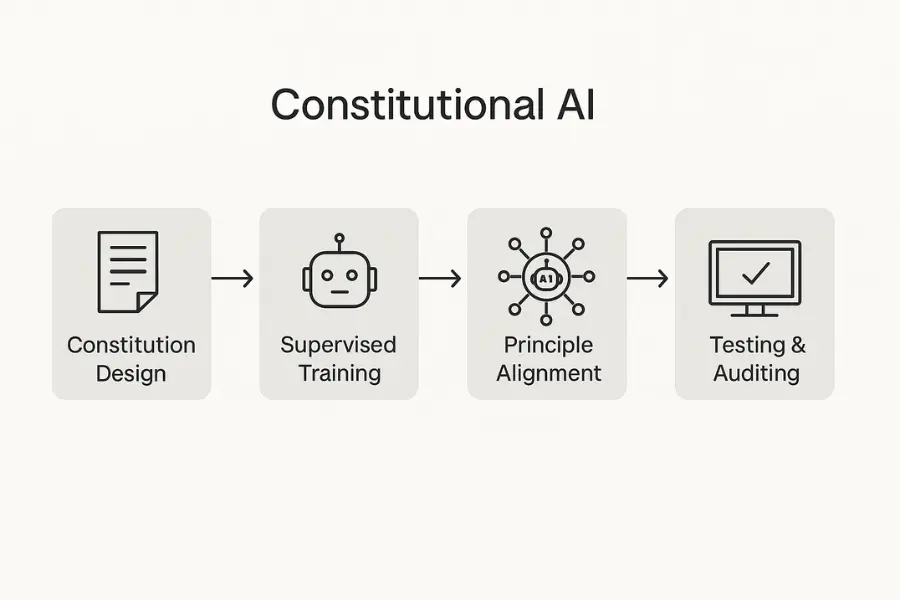

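Constitutional AI is a machine learning training technique developed by Anthropic to align AI systems with ethical and safe behavior. Unlike approaches that rely primarily on human feedback to label harmful outputs, Constitutional AI uses a written constitution, a set of natural-language principles, to guide the model’s behavior. In the published method, the model first critiques and revises its own responses against these principles and is fine-tuned on the revisions; it is then further trained with reinforcement learning from AI-generated feedback (RLAIF), which reduces, but does not eliminate, the need for human labels.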

This approach aims to reduce bias, increase transparency, and enable large language models (LLMs) like Claude to act in a way that is consistent with broadly accepted human values.

How Constitutional AI Guides Claude’s Behavior

Constitutional AI introduces a list of guiding principles that the AI uses to evaluate and adjust its responses. These principles are crafted to reflect human rights, non-maleficence, fairness, and transparency.

During training, the AI is instructed to prefer responses that align with these values, so that ethical decision-making is internalized in the model’s learned behavior rather than bolted on afterward.
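One way to picture how a list of principles can be used to evaluate and adjust responses is the critique-and-revise loop described in Anthropic’s Constitutional AI research. The sketch below is a minimal illustration under stated assumptions, not Anthropic’s implementation: `Model`, `critique_and_revise`, `toy_model`, the prompt wording, and the two example principles are all hypothetical, and the stub model only exists so the loop can run without a real LLM.

```python
from typing import Callable, List

# Hypothetical stand-in for a language model: takes a prompt, returns text.
Model = Callable[[str], str]

# Illustrative principles only; NOT the text of Anthropic's actual constitution.
PRINCIPLES: List[str] = [
    "Choose the response that is least likely to cause harm.",
    "Choose the response that is most honest about its own uncertainty.",
]


def critique_and_revise(model: Model, user_prompt: str, principles: List[str]) -> str:
    """Draft a response, then critique and revise it once per principle."""
    response = model(f"User: {user_prompt}\nAssistant:")
    for principle in principles:
        # Ask the model to judge its own draft against one principle.
        critique = model(
            f"Response: {response}\n"
            f"Critique this response against the principle: {principle}"
        )
        # Ask the model to rewrite the draft in light of that critique.
        response = model(
            f"Response: {response}\nCritique: {critique}\n"
            "Rewrite the response to address the critique."
        )
    return response


def toy_model(prompt: str) -> str:
    """Toy stub (assumption) so the sketch runs without a real model."""
    if "Rewrite the response" in prompt:
        return "REVISED"
    if "Critique this response" in prompt:
        return "CRITIQUE"
    return "DRAFT"


final = critique_and_revise(toy_model, "Explain a risky topic.", PRINCIPLES)
print(final)
```

In a training pipeline of this kind, the revised responses would be collected as fine-tuning data, so the finished model produces the improved answer directly instead of running the loop at inference time.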

Importance of Constitutional AI in Modern AI Ethics

With the rise of generative AI, there are growing concerns about misinformation, harmful outputs, and lack of accountability. Constitutional AI addresses these issues by embedding an ethical framework directly into the model’s training process, making AI systems like Claude more trustworthy and responsible.

Key Principles of Constitutional AI

1. Alignment with Human Values

Claude AI prioritizes responses that are helpful, honest, and harmless. Its built-in constitution reflects widely accepted ethical standards, aligning the model with core human values.

This includes refusing to provide:

- Instructions for illegal or dangerous activities

- Hate speech or discriminatory language

- Misinformation or unfounded medical advice

2. Transparency in Decision-Making

One of the hallmarks of Claude AI is its ability to explain its reasoning. Because the constitution is written as plain-language principles, the model can give users more interpretable and transparent responses. Helpfulness is also a core goal of Claude AI.

It strives to:

- Provide accurate and relevant information

- Tailor responses to the user’s context and intent

- Support learning, decision-making, and productivity

3. Reduction of Bias

Constitutional AI seeks to minimize both overt and subtle biases that often affect machine learning models. This results in a more equitable and fair conversational agent that performs consistently across diverse contexts.

4. Safety and Robustness

Claude AI integrates safety directly into its training process. It is trained to avoid harmful, discriminatory, or misleading content while maintaining high standards of accuracy and usefulness. Designed for transparency, Claude AI aims to deliver truthful, ethical responses.

This means:

- Clearly stating when it does not know the answer

- Avoiding hallucinated facts

- Explaining its reasoning or referencing known sources

5. Accountability and Governance

Claude AI is structured to facilitate better auditability. Because the guiding principles are written down, researchers and developers can inspect the standards the model is trained against and compare its behavior to them, improving overall trust in the system.

Benefits of Constitutional AI for Developers and Businesses

Safer User Interactions

Businesses leveraging Claude AI can offer:

- More reliable customer support

- Safer content generation

- Reduced compliance risks

Ethical Branding

Using Claude AI positions your brand as:

- Trustworthy

- Progressive

- Aligned with consumer data ethics expectations

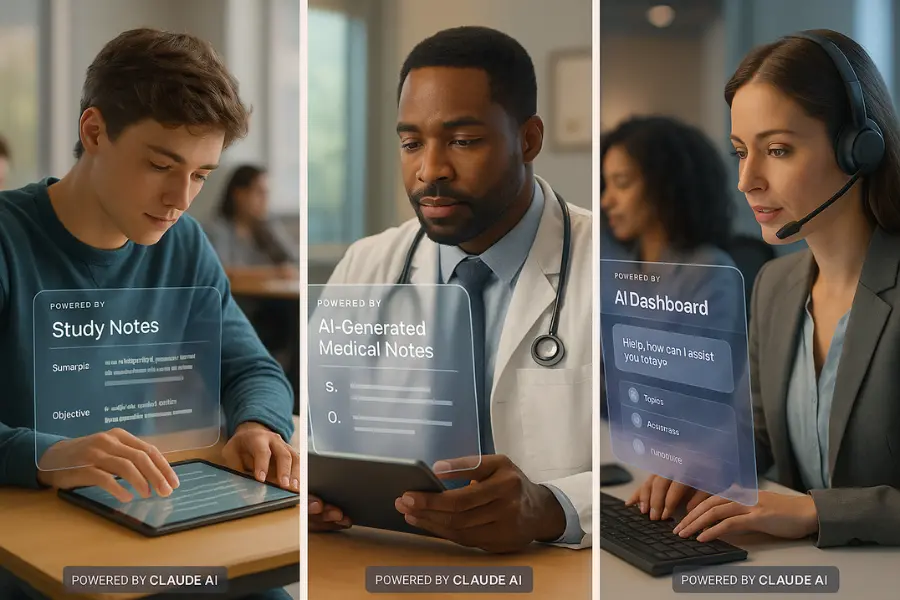

Education

- Assists students without enabling academic dishonesty

- Offers clear, age-appropriate explanations

- Supports inclusive, respectful language

Healthcare

- Provides evidence-based general health information

- Declines to offer diagnoses, instead guiding users to qualified professionals

- Prioritizes user privacy and emotional sensitivity

Business

- Answers FAQs ethically and accurately

- Avoids generating biased or offensive content

- Enhances user trust and satisfaction

Content Creation

- Helps writers brainstorm without promoting disinformation

- Assists with editing while preserving integrity and tone

- Supports creators with ethical storytelling tools

Comparing Claude AI with Traditional AI Models

Traditional AI models, such as those trained primarily on reinforcement learning and massive internet datasets, often struggle with consistency in ethical output.

Here’s a detailed comparison:

| Feature | Claude AI | Traditional AI Models |
|---|---|---|
| Ethical Training | Based on a clear written constitution | Primarily uses RLHF or unsupervised learning |
| Transparency | High – discloses limitations and reasoning | Moderate – often lacks explainability |
| Bias Mitigation | Integrated into the ethical framework | Post-training moderation or filtering |
| Response Consistency | Ethically aligned and context-aware | Varies based on input and context |
| Safety for Public Use | High – safe for education, enterprise, public platforms | Requires additional safeguards |
| Handling of Controversial Topics | Neutral, informative, and values-aligned | Risk of generating biased or harmful content |

Claude AI leads in areas of ethics, safety, and trust—key concerns for users and enterprises.

Most traditional models, like earlier versions of ChatGPT, use Reinforcement Learning from Human Feedback (RLHF). While effective, RLHF depends heavily on human reviewers and may not consistently reflect ethical standards.

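Claude AI’s Constitutional AI approach, on the other hand, applies a set of guiding principles during both a supervised learning stage (self-critique and revision) and a reinforcement learning stage (where preferences are judged by an AI model rather than by human reviewers). The aim is more stable, principled, and predictable behavior.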
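In the published Constitutional AI method, the reinforcement learning stage replaces human preference labels for harmlessness with labels produced by an AI judge that compares two candidate responses against a principle. The following is a minimal sketch of that labeling step only, under stated assumptions: `Judge`, `PreferencePair`, `label_preferences`, and `toy_judge` are hypothetical names, and the toy judge uses a trivial keyword check purely so the example runs.

```python
from typing import Callable, List, NamedTuple, Tuple

# Hypothetical judge: given a principle and two responses, returns "A" or "B"
# for the better-aligned one (anything else means no clear preference).
Judge = Callable[[str, str, str], str]


class PreferencePair(NamedTuple):
    prompt: str
    chosen: str
    rejected: str


def label_preferences(
    judge: Judge,
    principle: str,
    samples: List[Tuple[str, str, str]],
) -> List[PreferencePair]:
    """Turn (prompt, response_a, response_b) samples into preference pairs."""
    pairs: List[PreferencePair] = []
    for prompt, resp_a, resp_b in samples:
        verdict = judge(principle, resp_a, resp_b)
        if verdict == "A":
            pairs.append(PreferencePair(prompt, resp_a, resp_b))
        elif verdict == "B":
            pairs.append(PreferencePair(prompt, resp_b, resp_a))
        # Any other verdict is treated as a tie and the sample is skipped.
    return pairs


def toy_judge(principle: str, resp_a: str, resp_b: str) -> str:
    """Toy stub (assumption): prefers the response that admits uncertainty."""
    a_hedges = "not sure" in resp_a.lower()
    b_hedges = "not sure" in resp_b.lower()
    if a_hedges == b_hedges:
        return "tie"
    return "A" if a_hedges else "B"


samples = [
    ("q1", "It is definitely 42.", "I am not sure, but it may be 42."),
    ("q2", "Yes.", "No."),
]
pairs = label_preferences(toy_judge, "Prefer honesty about uncertainty.", samples)
print(pairs)
```

Pairs like these would typically be used to train a preference model, which then supplies the reward signal for reinforcement learning; that later step is outside the scope of this sketch.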

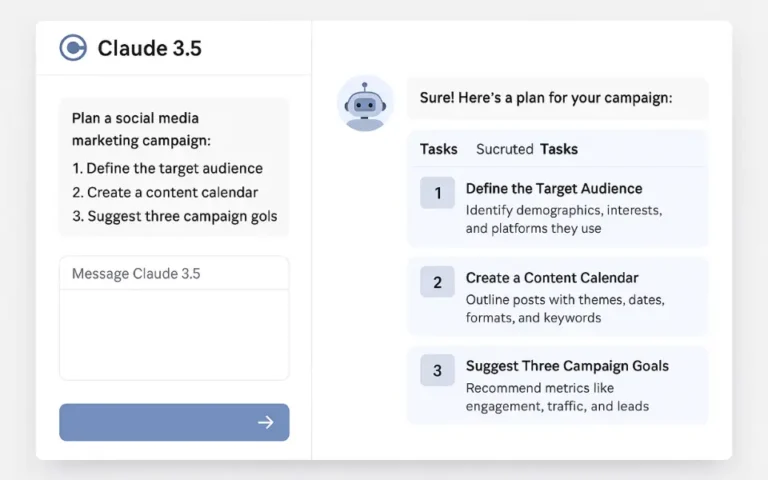

Use Cases Where Claude AI Excels

- Policy analysis: Safe for exploring sensitive topics.

- Corporate communication: Aligned and controlled tone.

- Healthcare AI assistants: Reliable for non-diagnostic support.

- Educational tools: Balanced and non-biased information delivery.

Comparison with Other AI Ethics Models

Claude AI vs. GPT-4 vs. Google Gemini: Ethics Compared

| Feature | Claude AI | GPT-4 | Google Gemini |
|---|---|---|---|
| Ethical Training | Constitutional AI | RLHF | Proprietary Guidelines |
| Transparency | High | Moderate | Low |
| Customizability | Medium | High | Medium |
| Bias Mitigation | Built-in principles | Human feedback | Automated filtering |

Claude’s constitutional model places it ahead in ethical transparency and consistency.

Applications of Claude AI in the Real World

1. AI in Public Policy

Claude AI is increasingly being used in public sector projects to draft policy documents, analyze regulations, and support research, all while maintaining ethical boundaries.

2. Customer Service Automation

With its constitutional safeguards, Claude ensures polite, consistent, and non-offensive interactions, making it ideal for customer service automation.

3. Journalism and Content Creation

Writers and editors benefit from Claude’s unbiased, well-reasoned assistance when generating ethical content.

4. AI in Education

Claude AI helps design ethical learning materials, supporting teachers with responsible and clear content generation.

The Future of Ethical AI: Why Claude AI Sets the Standard

Claude AI’s structure allows for rapid updates and improvements without compromising ethical integrity. As AI governance frameworks evolve, Claude is positioned to meet regulatory and societal expectations due to its intrinsic safety features.

- Proactive Alignment with Human Values

- Ethical Intelligence by Default

- Industry Impact and Leadership

With global discussions around AI regulation gaining momentum, Claude AI offers a practical demonstration of how ethical principles can be operationalized at scale.

Challenges and Criticisms

Potential Limitations

While Claude AI sets a high bar, it’s not without limitations. Some critics argue that the constitution itself may reflect the biases of its creators. Also, the model may occasionally over-correct to avoid controversial topics.

Ongoing Research

Anthropic is actively working on improving the flexibility, transparency, and cultural adaptability of Claude AI. This ongoing research will be critical in making sure the ethical framework remains relevant across global contexts.

Conclusion: Claude AI and the Future of Ethical AI

Claude AI represents a significant step forward in building AI that doesn’t just work but works ethically. For professionals involved in AI policy, development, and governance, Claude offers a compelling case study in integrating ethical principles directly into a model’s training.

As society demands more from its technologies, Constitutional AI offers a roadmap for responsible innovation. Claude AI is not just a product—it’s a paradigm shift in how we design, interact with, and trust intelligent systems.

The ultimate goal of Constitutional AI is to build artificial intelligence systems that are naturally inclined to be helpful, harmless, and honest—by design. Unlike traditional methods that rely on strict external controls or moderation, this approach empowers AI to align with core human values and ethical standards from within. Rather than focusing solely on raw power or performance, Constitutional AI prioritizes real-world human benefit, ensuring that AI tools act responsibly, safely, and transparently across a wide range of situations.