Claude AI Prompt Caching: A Game-Changer for Faster, Smarter AI

As AI continues to power smarter tools and experiences, optimization techniques like prompt caching are changing the way we build on models like Claude AI. This feature isn't just a technical trick; it's a cost-cutting, speed-boosting tool that every developer and product team should understand.

In this guide, you’ll learn what Claude AI Prompt Caching is, how it works, and how to leverage it to make your AI workflows faster, cheaper, and more effective.

What is Prompt Caching?

Prompt caching is a feature of the Claude API that lets you store (or “cache”) the reusable beginning of a prompt, such as a system prompt, tool definitions, or a long document. When a later request starts with exactly the same content, Claude reuses the cached work instead of reprocessing it from scratch.

This leads to:

- Lower latency (faster responses)

- Lower input cost (cached tokens are billed at a fraction of the normal rate)

- Stable, reusable context (the cached prefix is identical on every call, which suits structured workflows)

Why Prompt Caching Matters

Prompt caching unlocks benefits across key areas:

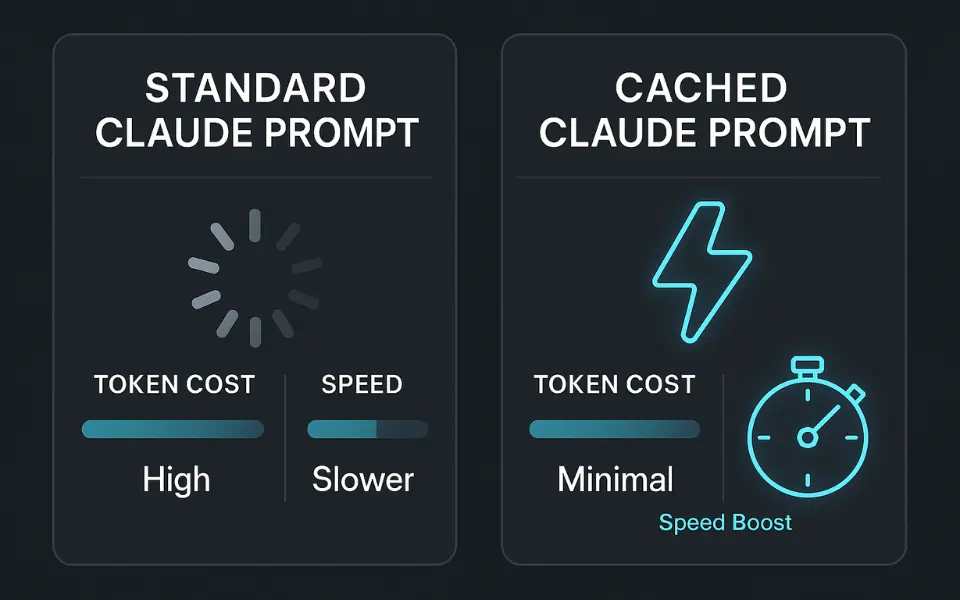

Performance

Anthropic reports latency reductions of up to 85% for long prompts. This is crucial for real-time apps like chatbots or coding assistants.

Cost Efficiency

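Cache reads cost as little as 10% of the price of a normal input token, while cache writes cost roughly 25% more than a normal input token. On high-volume workloads that reuse a long prefix, Anthropic cites cost reductions of up to 90%.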

Consistency

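Caching does not change what Claude generates: a response is the same whether or not the prefix came from the cache. What caching does encourage is a single, fixed block of instructions and examples shared by every call. That helps apps that need repeatable behavior, such as document parsing or logic tasks.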

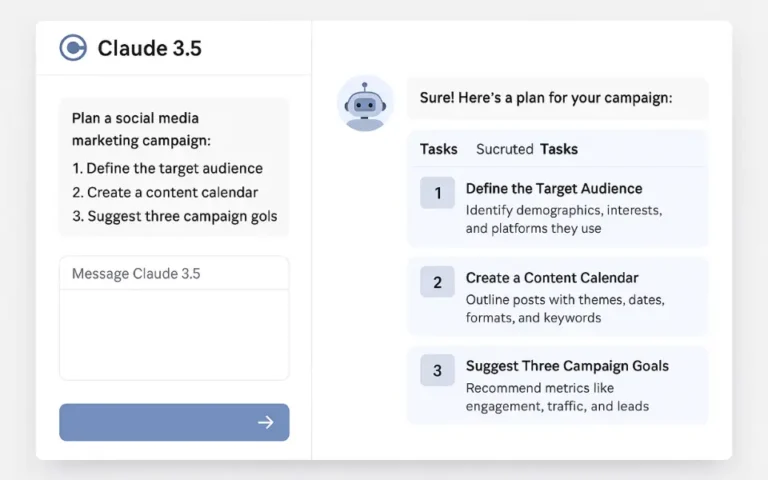

Use Cases Where Prompt Caching Shines

- Conversational AI & Chatbots: Cache long intros, personas, or context to keep multi-turn chats fast and cheap.

- Coding Tools: Preload language rules or codebase summaries to support live coding help.

- Document QA: Cache entire documents and answer user questions quickly, without reprocessing the document on every request.

- Instructional Systems: Supply 100+ examples for few-shot learning. Once cached, they add little latency to later calls.

- Multi-Agent Systems: Tools that iterate or make multiple API calls benefit heavily from reusing cached context.
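For the chatbot case, one common pattern is to put the cache breakpoint on the last content block of the newest message. This is how we read Anthropic's prompt caching documentation. Each turn then caches the conversation so far, and the next turn can read that prefix back. The sketch below only builds the message list and makes no API call. `with_cache_breakpoint` is a hypothetical helper. The message shape assumes the Messages API format: a role, plus either a string or a list of typed content blocks.

```python
import copy
from typing import Any

Message = dict[str, Any]


def with_cache_breakpoint(messages: list[Message]) -> list[Message]:
    """Hypothetical helper: return a copy of `messages` with a single cache
    breakpoint on the final content block of the last message."""
    marked = copy.deepcopy(messages)  # never mutate the caller's history
    for message in marked:
        # Plain-string content becomes a one-element list of text blocks
        if isinstance(message["content"], str):
            message["content"] = [{"type": "text", "text": message["content"]}]
        # Drop breakpoints left over from earlier turns
        for block in message["content"]:
            block.pop("cache_control", None)
    # Mark the newest block as the end of the cacheable prefix
    marked[-1]["content"][-1]["cache_control"] = {"type": "ephemeral"}
    return marked


history: list[Message] = [
    {"role": "user", "content": "Summarize chapter 1 of the attached manual."},
    {"role": "assistant", "content": "Chapter 1 covers installation."},
    {"role": "user", "content": "How does chapter 2 build on that?"},
]
request_messages = with_cache_breakpoint(history)
print(request_messages[-1]["content"][-1]["cache_control"])  # {'type': 'ephemeral'}
```

The helper removes older breakpoints, so a long conversation stays well within the API's limit on breakpoints per request. At the time of writing, that limit is four.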

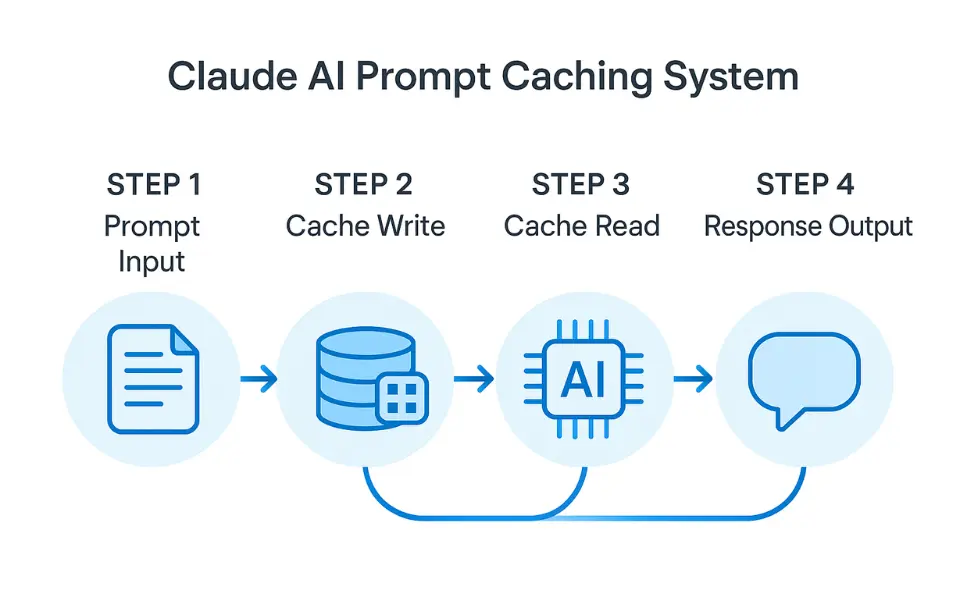

How It Works

Prompt caching comes down to two operations:

- Cache Write: The first time you send a prompt with a marked prefix, that static portion is processed and stored for reuse.

- Cache Read: Later requests that begin with exactly the same prefix retrieve it from the cache instead of reprocessing it.
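To make the write/read distinction concrete, here is a toy, in-memory model of this behavior. It is not how Anthropic implements caching. It only assumes two documented properties. First, a hit requires an exact match on the prefix. Second, an entry lives for five minutes by default, and each hit resets the timer. `ToyPrefixCache` is a hypothetical name.

```python
import hashlib


class ToyPrefixCache:
    """Toy model of prompt caching: an exact-match cache keyed on the prompt prefix.

    Not Anthropic's implementation; it only illustrates write vs. read and a
    time-to-live that is refreshed on every hit.
    """

    def __init__(self, ttl_seconds: float = 300.0) -> None:
        self.ttl_seconds = ttl_seconds
        self._expires_at: dict[str, float] = {}  # prefix hash -> expiry time

    def lookup(self, prefix: str, now: float) -> str:
        key = hashlib.sha256(prefix.encode("utf-8")).hexdigest()
        expiry = self._expires_at.get(key)
        result = "cache read" if expiry is not None and now < expiry else "cache write"
        # Either way, the entry now lives for another ttl_seconds
        self._expires_at[key] = now + self.ttl_seconds
        return result


cache = ToyPrefixCache()
system_prompt = "You are a contract-review assistant. <long policy text>"
print(cache.lookup(system_prompt, now=0))          # cache write
print(cache.lookup(system_prompt, now=120))        # cache read (within 5 minutes)
print(cache.lookup(system_prompt + " ", now=130))  # cache write (one extra character)
print(cache.lookup(system_prompt, now=1000))       # cache write (entry expired)
```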

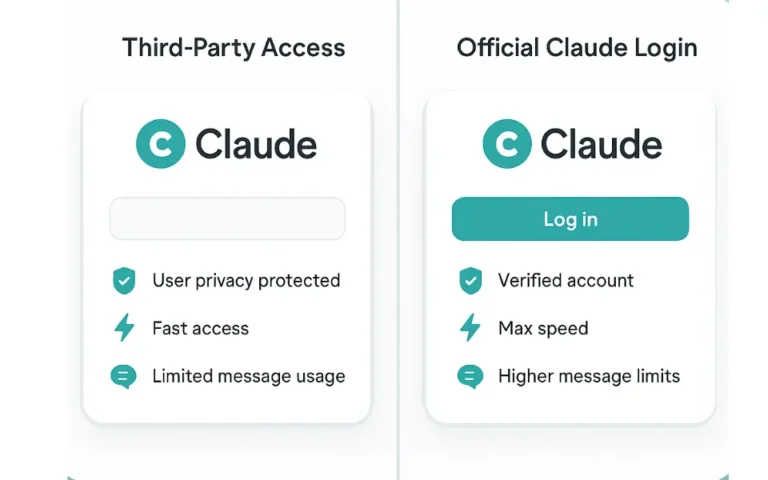

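You enable it by adding a cache_control parameter to a content block in your request, for example the last block of your system prompt. Everything up to and including that block becomes the cacheable prefix, and Claude handles the rest behind the scenes.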
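Below is a minimal sketch of a request body with one cache breakpoint. It is written as a plain Python dict, so it runs without an API key. It assumes the Messages API shape. The static instructions go in a system content block marked with {"type": "ephemeral"}. The per-request question goes in the user message after the breakpoint, so it never invalidates the cache. The model name is just one example; `build_request` and the placeholder text are hypothetical.

```python
import json

# Placeholder for long, static instructions. In a real request this must exceed
# the model's minimum cacheable length for caching to take effect.
STATIC_INSTRUCTIONS = "You are a support assistant for ExampleCo. <several thousand tokens of policy text>"


def build_request(user_question: str) -> dict:
    """Hypothetical helper: Messages API request body with a cached system prompt."""
    return {
        "model": "claude-3-5-sonnet-20240620",
        "max_tokens": 1024,
        "system": [
            {
                "type": "text",
                "text": STATIC_INSTRUCTIONS,
                # Everything up to and including this block is the cacheable prefix
                "cache_control": {"type": "ephemeral"},
            }
        ],
        # Dynamic, per-user content stays after the breakpoint
        "messages": [{"role": "user", "content": user_question}],
    }


request = build_request("How do I reset my password?")
print(json.dumps(request, indent=2))
```

With the official Python SDK, a dict like this can be passed as keyword arguments to client.messages.create. Early releases exposed caching as a beta feature, so check the documentation for the SDK version you have installed.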

Caching Requirements & Token Limits

Claude models support prompt caching with the following minimum token thresholds:

| Model | Min. Tokens (Cacheable Part) |
|---|---|
| Claude 3.5 Sonnet | 1024 tokens |
| Claude 3 Opus | 1024 tokens |
| Claude 3 Haiku | 2048 tokens |

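Prefixes shorter than the minimum are processed normally, without caching, even if they are marked with cache_control. Because the cache matches prompts from the start, keep static and reusable content at the beginning of your prompt, and use structured formatting to avoid accidental mismatches.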
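A quick pre-flight check can catch prefixes that are too short to cache. This sketch hard-codes the thresholds Anthropic documents for these models: 1024 tokens for Claude 3.5 Sonnet and Claude 3 Opus, and 2048 for Claude 3 Haiku. It assumes you already have a token count for the prefix, for example from the usage data of an earlier response. `meets_cache_minimum` and the dictionary keys are hypothetical.

```python
# Minimum cacheable prefix length, in tokens, per Anthropic's documentation
# for these models. Shorter prefixes are processed without caching.
MIN_CACHEABLE_TOKENS = {
    "claude-3-5-sonnet": 1024,
    "claude-3-opus": 1024,
    "claude-3-haiku": 2048,
}


def meets_cache_minimum(model_family: str, prefix_tokens: int) -> bool:
    """Hypothetical check: is a prefix of `prefix_tokens` tokens long enough to cache?"""
    if model_family not in MIN_CACHEABLE_TOKENS:
        raise ValueError(f"no threshold recorded for {model_family!r}")
    return prefix_tokens >= MIN_CACHEABLE_TOKENS[model_family]


print(meets_cache_minimum("claude-3-5-sonnet", 1500))  # True
print(meets_cache_minimum("claude-3-haiku", 1500))     # False
```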

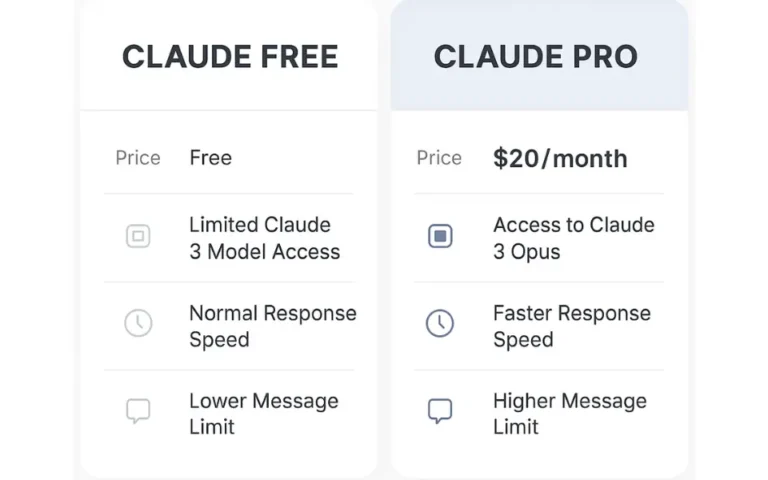

Claude Prompt Caching Pricing

Prompt caching makes financial sense, especially at scale.

Here’s a breakdown:

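| Model | Base Input Cost | Cache Write Cost | Cache Read Cost |
|---|---|---|---|
| Claude 3.5 Sonnet | $3/1M tokens | $3.75/1M tokens | $0.30/1M tokens |
| Claude 3 Opus | $15/1M tokens | $18.75/1M tokens | $1.50/1M tokens |
| Claude 3 Haiku | $0.25/1M tokens | $0.30/1M tokens | $0.03/1M tokens |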

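A cache write costs slightly more than a normal input token, but every read within the cache's lifetime costs roughly a tenth as much, so the savings on reads quickly make up for it. Writes are not strictly one-time, though. The cache expires after five minutes without use (each read resets the timer), and after that the next request pays the write price again.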
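The arithmetic behind that claim is easy to check. The sketch below compares the input cost of a repeated prefix with and without caching. It uses the write and read prices from the table, plus each model's standard input price: $3, $15, and $0.25 per million tokens for Claude 3.5 Sonnet, Claude 3 Opus, and Claude 3 Haiku. It assumes every call lands within the cache lifetime, so only the first call pays for a write. It ignores output tokens and the uncached tokens after the prefix, since those cost the same either way. `prefix_cost` is a hypothetical helper.

```python
# USD per million input tokens
PRICES = {
    "sonnet": {"base": 3.00, "write": 3.75, "read": 0.30},
    "opus": {"base": 15.00, "write": 18.75, "read": 1.50},
    "haiku": {"base": 0.25, "write": 0.30, "read": 0.03},
}


def prefix_cost(model: str, prefix_tokens: int, calls: int, cached: bool) -> float:
    """Input cost in USD of sending the same prefix `calls` times."""
    if calls < 1:
        raise ValueError("calls must be at least 1")
    price = PRICES[model]
    millions = prefix_tokens / 1_000_000
    if not cached:
        return calls * millions * price["base"]
    # First call writes the cache; the rest read it (assumes no expiry in between)
    return millions * price["write"] + (calls - 1) * millions * price["read"]


without = prefix_cost("sonnet", 100_000, calls=10, cached=False)
with_cache = prefix_cost("sonnet", 100_000, calls=10, cached=True)
print(f"without cache: ${without:.3f}")            # without cache: $3.000
print(f"with cache:    ${with_cache:.3f}")         # with cache:    $0.645
print(f"savings: {1 - with_cache / without:.1%}")  # savings: 78.5%
```

On Sonnet and Opus, a write costs 1.25× the base rate and a read costs 0.1×. Caching therefore pays for itself by the second call: two cached calls cost 1.35× the base price of the prefix, versus 2× without caching.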

Best Practices for Prompt Caching

- ✅ Cache static and reusable instructions, context, or examples.

- 🚫 Avoid dynamic content (like timestamps or user-specific data) in cache blocks.

- 🧪 Monitor cache hits and misses through the cache_creation_input_tokens and cache_read_input_tokens fields in each response's usage data.

- 🔄 When your context changes, expect a fresh cache write: any edit to the prefix creates a new cache entry, and stale entries simply expire.
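For the monitoring tip, the Messages API reports cache activity in each response's usage data through three fields:

- cache_creation_input_tokens: tokens written to the cache.
- cache_read_input_tokens: tokens served from the cache.
- input_tokens: tokens after the last breakpoint, billed normally.

A small aggregator can turn these into a hit ratio. The sketch assumes usage has already been converted to plain dicts; `CacheStats` is a hypothetical name, and the sample numbers are illustrative.

```python
from dataclasses import dataclass


@dataclass
class CacheStats:
    """Hypothetical aggregator for cache-related usage fields across responses."""

    created: int = 0   # tokens written to the cache
    read: int = 0      # tokens served from the cache
    uncached: int = 0  # tokens after the last breakpoint

    def record(self, usage: dict) -> None:
        # Fields may be absent or None when caching was not used
        self.created += usage.get("cache_creation_input_tokens") or 0
        self.read += usage.get("cache_read_input_tokens") or 0
        self.uncached += usage.get("input_tokens") or 0

    @property
    def hit_ratio(self) -> float:
        """Share of all input tokens that were served from the cache."""
        total = self.created + self.read + self.uncached
        return self.read / total if total else 0.0


stats = CacheStats()
# Illustrative usage data: the first call writes the cache, the second reads it
stats.record({"input_tokens": 50, "cache_creation_input_tokens": 2000, "cache_read_input_tokens": 0})
stats.record({"input_tokens": 60, "cache_creation_input_tokens": 0, "cache_read_input_tokens": 2000})
print(f"hit ratio: {stats.hit_ratio:.1%}")  # hit ratio: 48.7%
```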

Real-World Example: Notion AI

Companies like Notion are using Claude’s prompt caching to power their AI assistants. By caching lengthy instructions and knowledge bases, they’ve cut costs and improved response times for users.

Final Thoughts

Prompt caching with Claude AI isn’t just an optional feature; it’s a must-have optimization tool for anyone building scalable AI solutions. Whether you’re designing agents, document tools, or interactive assistants, caching can save you money, speed up delivery, and make user experiences more seamless.

If you’re serious about performance and efficiency, it’s time to make Claude’s prompt caching part of your AI stack.